DISCUSSING UNBLINDED RESULTS

First, thanks to Garry and all thirteen composers for their hard work.

Since the library

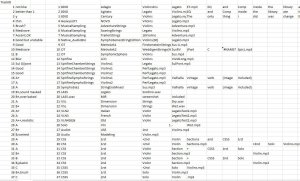

I like the most received the highest possible marks from all the people who listened through all 78 examples, it would be easy to point at this competition as proof that "It's the best."

However, I do agree with previous posts that the competition had two weaknesses. First, the libraries are completely at the mercy of the user to demo them well. Second, having multiple entries for one library didn't necessarily lead to unfair grading, but it did give that library more chances to catch people's attention when they were skimming through all 78. I think in future competitions of this type, the organizer should choose just one example for each library and should exercise their judgement to pick the best option out of however many are submitted.

However, all I can really do is note those two issues in passing and move on.

I think there were 5 results of the competition that really surprised me or are worth remarking on:

1. Cinematic Studio Strings received consistently top grades from every reviewer. It's possible that publishing my own grades early on drew attention to those tracks, but I think everyone was just impressed by those recordings. What makes the library stand out is not just the quality of the individual samples but the effort & insight in the programming that connects them. Because of that, CSS can pass most of Saxer's obstacle course with no sweat, especially obstacles that challenged many other libraries like the repeated-note test, the runs test (marcato legato), and the long/short mixture test. There were few obstacles where other libraries consistently beat CSS. Perhaps on example D, the repeated transition test, there were a few libraries that sounded more natural. Compared to CSS, Cinematic Studio Solo Strings received a significantly less positive response and wasn't a standout among the solo-violin entries at all. However, CSSS+CSS still achieved top grades when blended together.

2. All graders exhibited a strong tendency to give low grades to the entries that turned out not to be true-legato demos at all, but "sus patch" demos. Composer #1, for instance, gave us several recordings of libraries that were never designed to be able to play Saxer's passages. This shows that true legato sampling does create an audible difference... not exactly a lightning bolt revelation but still interesting to prove empirically.

3. Most of the time, the standard deviation of the 5 grade lists was low. That means the reviewers generally agreed with each other. The tracks with the most significant disagreement were the Virharmonic Bohemian Violin and several of the tracks (from different composers, even!) demonstrating Spitfire Chamber Strings. For those two libraries, A's and C's were handed out in equal measure!

4. Chris Hein's strings received higher - and more consistent - grades than you would expect from the little discussion & hype on VI-Control. Across the six tracks he submitted, the five reviewers gave Chris's tracks just one C, seventeen B's and twelve A's. Overall, his library received the highest scores for non-solo strings if you take out CSS, with Hollywood Strings coming close behind.

5. The dog that didn't bark in this competition is how many of the high-priced, true-legato libraries from the 2010-2016 era received very middling or even low marks. It would be very unfair to single out any individual library since the grades were so totally dependent on how capable the user was and how much time they took to create these demos. However, there were a lot of "flagship" string libraries that received solid B's or even low B's across the board.
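For anyone who wants to check the "low standard deviation" observation in point 3 against their own copy of the grades, here is a rough sketch of how the per-track spread could be computed. The letter-to-number mapping, the function name `grade_spread`, and the two example grade lists are all my own assumptions for illustration; they are not the actual competition data or necessarily the scale used for the real results.

```python
from statistics import pstdev

# Hypothetical letter-to-number mapping; plus/minus grades are ignored here.
GRADE_POINTS = {"A": 4.0, "B": 3.0, "C": 2.0, "D": 1.0, "F": 0.0}


def grade_spread(grades):
    """Population standard deviation of one track's letter grades."""
    return pstdev([GRADE_POINTS[g] for g in grades])


# Made-up grades from five reviewers, for illustration only.
example_tracks = {
    "reviewers mostly agree": ["A", "A", "A", "B", "A"],
    "A's and C's in equal measure": ["A", "C", "A", "C", "B"],
}

for name, grades in example_tracks.items():
    print(f"{name}: spread = {grade_spread(grades):.2f}")
```

A track where the reviewers agree gets a small number, and a track that splits between A's and C's gets a noticeably larger one, which is all the comparison in point 3 relies on.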