Dewdman42

Senior Member

Harmor has resynthesis, but I don't know if I would say the result is indistinguishable. It's a start toward something you can mangle as a synth, really.

I wrote a magazine article about resynthesis for a high-tech music store I was working for around 1990, and I have been waiting ever since for technology to catch up so it might be done someday. But the simple truth is that resynthesizing acoustic instruments would take orders of magnitude more data and CPU than samples, for a marginal return really. In 1990 it seemed more interesting because sample technology was nowhere close to what it is today. Today I think sample tech is quite good, and moving beyond it with resynthesis will require a lot more computing resources than people realize.

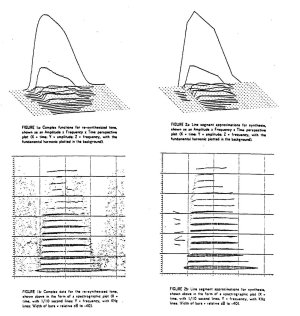

For example, an acoustic instrument has a timbre that changes over time. If you use additive synthesis, which is probably the most "generic" way to resynthesize, it could take dozens or even hundreds of partials to recreate the sound. And because that sound changes over time, you'd be talking about dozens or hundreds of data points, potentially for every rendered sample, which means far more data and CPU demand than samples. It might not be necessary to add up the partials once per rendered sample; you might be able to use interpolation and other tricks to update the partials at longer intervals while still rendering output at the sample rate. Hard to say. But to do this well enough to sound like real instruments, you would still basically be sampling the input to get the same kind of data, just to resynthesize it again, and you wouldn't really save anything in terms of hardware demands.
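To make the data-rate argument concrete, here is a minimal Python sketch of additive resynthesis. It assumes each partial is a fixed-frequency sine whose amplitude follows its own sparse breakpoint envelope, linearly interpolated up to an assumed 44.1 kHz output rate (the interpolation trick mentioned above). The function names and the figures of 100 partials with 2 parameters each are hypothetical, chosen only for illustration; a real resynthesizer would also track each partial's frequency over time.

```python
import math

SAMPLE_RATE = 44100  # assumed output rate in Hz


def interpolate(breakpoints, t):
    """Linearly interpolate a sorted list of (time_sec, value) breakpoints at time t."""
    if t <= breakpoints[0][0]:
        return breakpoints[0][1]
    for (t0, v0), (t1, v1) in zip(breakpoints, breakpoints[1:]):
        if t <= t1:
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    return breakpoints[-1][1]  # hold the last value after the final breakpoint


def additive_render(partials, duration_sec):
    """Sum sine partials, each scaled by its own amplitude envelope.

    partials: list of (frequency_hz, amplitude_breakpoints) tuples.
    The sparse breakpoints are interpolated to a value for every output sample.
    """
    n = int(round(duration_sec * SAMPLE_RATE))
    out = [0.0] * n
    for freq, envelope in partials:
        for i in range(n):
            t = i / SAMPLE_RATE
            out[i] += interpolate(envelope, t) * math.sin(2 * math.pi * freq * t)
    return out


def control_values_per_second(num_partials, params_per_partial, update_rate_hz):
    """How many partial parameters must be stored or computed per second."""
    return num_partials * params_per_partial * update_rate_hz


# Hypothetical comparison: 100 partials, amplitude + frequency each.
per_sample = control_values_per_second(100, 2, SAMPLE_RATE)  # 8,820,000
per_100_samples = control_values_per_second(100, 2, SAMPLE_RATE / 100)  # 88,200.0
print(per_sample / SAMPLE_RATE, per_100_samples / SAMPLE_RATE)
```

Under these assumptions, updating every partial on every output sample means 8,820,000 values per second versus 44,100 for a mono 44.1 kHz sample stream, about 200 times more. Updating once every 100 samples and interpolating brings it down to 88,200, still about twice the raw sample stream, and the envelope data itself still has to come from analyzing recordings.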

So what is the point? Good question, and probably why nobody has done it. The only advantage I can think of is the potential to modify, in real time, the way the partials are combined, for a less "sampled" sound. However, the reality is that it would not be very intuitive to figure out exactly how to do that, and it would probably lead to unrealistic acoustic instrument results.
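As a rough illustration of what "modifying the way the partials are combined" could look like, here is a minimal sketch of a hypothetical "brightness" control that applies a spectral tilt to a list of harmonic amplitudes. The function name and the dB-per-octave mapping are assumptions for illustration, not taken from any product. The code is trivial to write; whether the result still sounds like the original acoustic instrument is exactly the open question.

```python
import math


def tilt_partials(amplitudes, tilt_db_per_octave):
    """Return new amplitudes with a spectral tilt applied.

    amplitudes[k] is the amplitude of harmonic k + 1. A tilt of -3 dB/octave
    attenuates each doubling of harmonic number by a further 3 dB; a positive
    tilt boosts the upper harmonics instead. The fundamental is left unchanged.
    """
    out = []
    for k, a in enumerate(amplitudes):
        harmonic = k + 1
        octaves_above_fundamental = math.log2(harmonic)
        gain_db = tilt_db_per_octave * octaves_above_fundamental
        out.append(a * 10 ** (gain_db / 20))  # convert dB gain to linear
    return out


# Hypothetical usage: brighten a four-harmonic spectrum by 3 dB per octave.
brighter = tilt_partials([1.0, 0.5, 0.33, 0.25], 3.0)
```

One knob moves every partial at once, which is convenient, but nothing in the math knows how a real instrument's spectrum would actually change when played harder or softer.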

In my opinion, when you look at other kinds of modeling like Pianoteq, they are doing similar things, but rather than "generically" resynthesizing partials, they use very focused algorithms that can be optimized for the particular instrument being modeled. That is why we already see many products that can do this, and they are getting better every day.
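For contrast with the generic additive approach, here is a minimal sketch of the Karplus-Strong plucked-string algorithm, a classic textbook example of an instrument-specific model. It is swapped in purely as an illustration and is not Pianoteq's method, which the post does not describe. Instead of storing envelopes for hundreds of partials, it uses one delay line (the string) and an averaging loss filter, and the changing timbre falls out of the structure. The function name and default parameters are hypothetical.

```python
import random


def karplus_strong(frequency_hz, duration_sec, sample_rate=44100, decay=0.996, seed=0):
    """Basic Karplus-Strong plucked string.

    A delay line about one period long is filled with noise (the "pluck").
    Each output sample is replaced in the line by the scaled average of itself
    and the next sample, a simple low-pass loss filter, so high harmonics die
    away faster than low ones, much as on a real string.
    """
    rng = random.Random(seed)  # seeded so the output is reproducible
    period = max(2, int(round(sample_rate / frequency_hz)))
    delay = [rng.uniform(-1.0, 1.0) for _ in range(period)]
    n = int(round(duration_sec * sample_rate))
    out = []
    for i in range(n):
        idx = i % period
        nxt = (i + 1) % period
        out.append(delay[idx])
        delay[idx] = decay * 0.5 * (delay[idx] + delay[nxt])
    return out


pluck = karplus_strong(220.0, 0.1)
```

The averaging filter adds about half a sample of delay, so the pitch comes out slightly below `frequency_hz`; that is acceptable for a sketch, and refining details like that is the kind of instrument-specific optimization focused models allow.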