NekujaK

I didn't go to film school, I went to films -QT

Email sent to Recording Academy members...

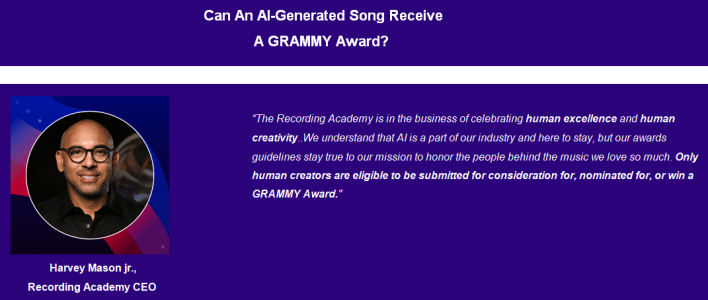

In the ever-evolving landscape of music, the rise of generative artificial intelligence (AI) brings with it an exciting frontier — not as a replacement for human creativity, but as a partner enhancing the musical experience.

Together, human ingenuity and AI can create symphonies that were previously unimaginable, transcending traditional boundaries and introducing a new era of expression.

To ensure this future benefits all creators, adequate legislation must be implemented to foster a thriving ecosystem where technology amplifies, and does not replace, creativity and artists.

Join us in shaping a future where humanity and technology play in concert.

1. There are no safeguards in place at the federal level to protect an artist’s voice, image, name, or likeness from AI exploitation.

2. Over 170 million AI-generated tracks have been created so far, totaling over 970 years of constant listening.

3. The generative AI music market is expected to be valued at $2.6 billion by 2032.

4. AI music generators have used copyrighted songs and lyrics without authorization from artists, songwriters, music publishers, or record labels to train their data models.

5. The Recording Academy spearheaded a collective effort to combat AI fraud in Tennessee with the passing of the ELVIS Act, the first law to protect human creativity at the state level.

6. Federal legislation, like the No AI FRAUD Act, is imperative to protect human music makers.

7. Used responsibly, AI can contribute to amazing creative opportunities and enhance human artistry.

This week at GRAMMYs on the Hill, the Recording Academy will share AI concerns with lawmakers on Capitol Hill during Advocacy Day and at the inaugural Future Forum where panel discussions will explore the impacts of artificial intelligence on our community.

We’ll be advocating for YOU on Capitol Hill, but we need your support from home, on the road, or wherever you are!

Get Ready To Protect Human Artistry On Advocacy Day, May 1!

You can support the cause by contacting your local representatives, amplifying our efforts on social media, exploring our AI resource hub to deepen your understanding of the issues, and more.

How much do you actually know about AI in music?
