Hi @lettucehat,

Here's what I'm doing right now:

One VEP instance per library. So I have an instance called 'VEP CineBrass'.

In there, I have one Kontakt multi per instrument. So, I've got the following multis (each on its own track in VEPro) for CineBrass:

- CB Solo Tpt (port 1)

- CB Tpt Ens (port 2)

- CB Solo Horn (port 3)

- CB Hn Ens (port 4)

- CB Solo Tbn (port 5)

- CB Tbn Ens (port 6)

- ... you get the idea

Each of those multis is on a separate port, so I can have up to 16 MIDI channels per Kontakt multi.
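For anyone who wants to plan the layout before building it, here's a rough Python sketch of the port/channel scheme above. The port numbers and multi names are the ones from my list; the `slot_index` helper and the flat numbering are just something I made up for illustration, not anything VEPro or Kontakt exposes.

```python
# Port -> Kontakt multi, as listed above (only the first six ports shown).
PORTS = {
    1: "CB Solo Tpt",
    2: "CB Tpt Ens",
    3: "CB Solo Horn",
    4: "CB Hn Ens",
    5: "CB Solo Tbn",
    6: "CB Tbn Ens",
}

CHANNELS_PER_PORT = 16  # one port carries 16 MIDI channels


def slot_index(port: int, channel: int) -> int:
    """Hypothetical helper: flat 0-based index for a (port, channel) pair."""
    if port < 1:
        raise ValueError("ports are numbered from 1")
    if not 1 <= channel <= CHANNELS_PER_PORT:
        raise ValueError("MIDI channels run from 1 to 16")
    return (port - 1) * CHANNELS_PER_PORT + (channel - 1)


# Total addressable channels across the ports listed here.
total_slots = len(PORTS) * CHANNELS_PER_PORT

if __name__ == "__main__":
    for port, multi in PORTS.items():
        first = slot_index(port, 1)
        last = slot_index(port, CHANNELS_PER_PORT)
        print(f"port {port}: {multi:<13} slots {first}-{last}")
    print("total slots:", total_slots)
```

The point is just that every extra port buys another 16 channels, so one multi per port leaves plenty of room for articulations.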

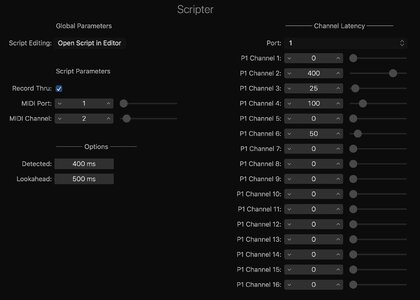

In the Logic Environment, I have an Instrument channel strip with the VEP AU3 plugin. I clone this strip as many times as I need and change the port number on each clone. I think I have 9 ports for CineBrass. Each clone is set to a different port, which maps to the corresponding multi in VEP - CB Solo Tpt, CB Solo Horn, etc.

Also in the Environment, I have a MIDI Instrument for each articulation - e.g. CB Solo Tpt Legato, CB Solo Tpt Longs, CB Solo Tpt Shorts. These MIDI instruments point to the corresponding Instrument channel strip.

Finally, in Tracks view, I have a bunch of External MIDI tracks that I 'reassigned' to each of the MIDI Instruments. I use articulations on these to switch between long, marcato, trill, trem, etc. But this is where my plan fell to pieces... I was going to put a negative delay on each of these MIDI tracks (one per articulation), but Logic simply ignores it on these tracks. BIG bummer.

To get around this, I set up multiple outputs in each Kontakt instrument in VEPro. So in CB Solo Tpt, I have legatos going to st.1 (1/2), longs going to st.2 (3/4), and shorts going to st.3 (5/6). In VEPro, I hit the plus button to create two additional audio outputs that correspond to the extra Kontakt outs. I put the Expert Sleepers Latency Fixer plugin on each of these 3 audio outs, so each articulation type gets its own offset. All audio in each VEPro instance still sums to a single stereo return that feeds back into Logic. You could obviously use multi-out if you wanted.
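To make the per-output idea concrete, here's a small Python sketch of the bookkeeping. The output pairs are the ones I described above; the millisecond values are made-up placeholders (measure your own patches), and the 48 kHz sample rate is an assumption. Whether the latency plugin wants its value in milliseconds or samples is something to check in the plugin itself, so this just shows the conversion both ways round.

```python
# Articulation -> Kontakt stereo output pair, as routed in CB Solo Tpt above.
OUTPUTS = {
    "legato": (1, 2),  # st.1
    "longs": (3, 4),   # st.2
    "shorts": (5, 6),  # st.3
}

# HYPOTHETICAL offsets in milliseconds, for illustration only.
OFFSET_MS = {
    "legato": 100.0,
    "longs": 60.0,
    "shorts": 30.0,
}


def ms_to_samples(ms: float, sample_rate: int = 48000) -> int:
    """Convert a delay in milliseconds to whole samples."""
    return round(ms * sample_rate / 1000)


def offset_table(offsets_ms: dict, sample_rate: int = 48000) -> dict:
    """Per-articulation offset in samples, keyed like OUTPUTS."""
    return {art: ms_to_samples(ms, sample_rate) for art, ms in offsets_ms.items()}


if __name__ == "__main__":
    table = offset_table(OFFSET_MS)
    for art, pair in OUTPUTS.items():
        print(f"{art:<7} out {pair[0]}/{pair[1]}: "
              f"{OFFSET_MS[art]} ms = {table[art]} samples")
```

One offset per output is the whole trick: anything routed to the same Kontakt out shares that offset, so group articulations by how much lead time they need.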

Still not sure this is all going to work... I just finished setting up all the instruments today and need to do some stress testing with an actual track. If it doesn't work, I'm seriously thinking of switching to Cubase.

PS - Haven't had a chance to test Dewdman's script yet. Need to establish a baseline first.

Hope this helps,

Marc