Core Utilization

- Thread starter Ashermusic

- Start date

Dewdman42

Senior Member

@Ashermusic your results are right in line with all the perf testing I did a few months ago. LogicPro kicks everyone's ass on CPU use on the mac. It even beat VEP when comparing the same project played directly through LogicPro vs using VEP for the same tracks.

Not my results though, a guy on Gearslutz did it.

Oh, and specifically:

Running on a 2018 MacBook Pro, i9, 32 GB RAM.

macOS 10.14.6 (current version as of Sep 1, 2019)

Current versions (Sep 1, 2019) of Logic, ProTools, StudioOne, Cubase.

Current versions (Sep 1, 2019) of all plugins: iZotope, Native Instruments, Superior Drummer 3

25-track session built in each DAW using just 3rd-party plugins.

Buffer 128

5 Audio tracks with Nectar 3

5 Audio tracks with Neutron 3

10 Instrument tracks with Kontakt

5 Instrument tracks with Superior Drummer 3

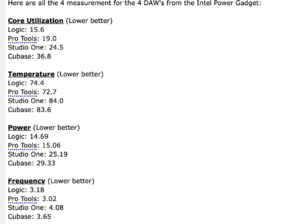

Intel Power Gadget shows Core Utilization:

rgames

Collapsing the Wavefunction

I'm not sure what to make of those measurements because <drumroll> all the DAWs appear to work. What impact do they have on workflow and productivity? None that I can discern.

A marginally meaningful benchmark would be something like min latency achievable without dropouts. A truly meaningful benchmark is something like number of minutes of music produced per day. If the project runs fine, who cares what the CPU usage is?

My washing machine runs at about 80% of its capacity. If I went out and bought a giant industrial washing machine I could get it down to something like 20%. But why would I do that if the current one does just fine at 80%? I wouldn't. I don't care what the usage is as long as it works.

Maybe heat and power consumption are an issue. But if you run those for eight hours a day over the course of a month you're looking at something like 5 cents difference. Not enough to matter unless you're running hundreds of machines. 5 cents is way down in the noise in monthly power usage.

As of 8-10 years ago DAWs started hitting real-time bottlenecks before CPU bottlenecks. So that's where the meaningful benchmarks are. But even then the distinctions are mostly meaningless these days. Even real-time performance is good enough that it's not an issue that often.

rgames

Richard, long time, no see. For me it's simple in terms of performance. The more software instruments and FX I can run without issues, the better the DAW. The fewer, the worse the DAW.

But of course then there is workflow, etc.

Dewdman42

Senior Member

Both aspects are interesting. I feel that latency performance is more a measurement of the specific hardware than of the DAW being used. If you want to compare hardware to other hardware, then you need a baseline constant, which means comparing different hardware on the same DAW. If you want to compare DAWs, then you need the hardware and everything else held constant.

All of the DAWs can go to low latency and generally will isolate a live track to a single core, so what your machine can do on a single core with the audio card and drivers that you are using (and DPC issues, etc.) will largely determine how small you can make the buffer and thus the latency you will get. Simple as that. While it's somewhat interesting to hear what other people are getting out of their hardware, it's largely useless information unless you're planning to shop for a new computer and you want to compare different systems.

But if we want to compare DAWs... then first of all you have to make the comparison with as many factors exactly the same as possible, including the hardware. In that light, my 5,1 Mac Pro with a slow single core is not going to get very low latency compared to some other systems, no matter which DAW I run. Well, sorta, I get pretty darn low latency when I use my $1000 Lynx card together with my $1000 X32 mixer over AES50. So that hardware has a lot to say in that conversation about latency also... But I am sure that if I had a newer Mac with faster single-core performance I would be able to go to the smallest buffer sizes without crackles. It's all about the hardware really.

I feel the DAW you choose has very little impact on this aspect... But it would be interesting for sure to see a test where one system is used, with everything else the same except for which DAW is being used, measuring how low the latency can get in each case.

But overall mix performance is also interesting, which gets into multi-core utilization. The DAWs also have GUI operations, and some are more efficient than others. If you're running HiDPI, it can make a difference. Overall multi-core utilization is going to put more emphasis on comparing DAWs to each other in terms of how efficiently they use the operating system services comprehensively, with lots of plugins and tracks and graphics, while spoon-feeding audio to the sound card.

When I tested that a few months ago, there were large differences between some DAWs. Cubase 10.0.20 performed really, really badly compared to everything else: it could not even play 100 tracks, where only a few dozen were ever playing at a time. Steinberg fixed something in 10.0.30 that brought it closer to what StudioOne, Reaper and DP were doing. LogicPro, however, performed way ahead of all of them in this area. Reaper was not the leader at all, by the way. Maybe Windows would be different.

I did not do a test for low latency comparisons. I wanted to specifically see what each of them could do, and Cubase 10.0.20 could not even complete the task with a very large buffer, much less a small buffer. In order to keep the comparison between DAWs the same, I used the same large buffer for all of them.

But an equally interesting test would be to see how low the buffer can go before drop outs start to happen.
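For anyone following along, the link between buffer size and latency is plain arithmetic: samples in the buffer divided by the sample rate. Here is a minimal sketch. The buffer of 128 comes from the benchmark quoted earlier, but the thread never states the sample rate, so the rates below are assumptions, and real round-trip latency will be higher once driver and converter overhead is added.

```python
def buffer_latency_ms(buffer_frames: int, sample_rate_hz: float) -> float:
    """Time covered by one audio buffer, in milliseconds."""
    return 1000.0 * buffer_frames / sample_rate_hz

# 128 is the buffer size from the quoted benchmark; the sample rates are assumed.
for rate in (44_100, 48_000, 96_000):
    print(f"{rate} Hz: {buffer_latency_ms(128, rate):.2f} ms per buffer")
```

This is only the time the DAW has to fill one buffer, which is the deadline that matters for dropouts.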

rgames

Collapsing the Wavefunction

"The more software instruments and FX I can run without issues, the better the DAW. The fewer, the worse the DAW."

Of course. What I'm saying is those metrics are not related to the measurements above. Where in the data above do we see the point where the CPU usage gets to 100% and you can't add any more effects? We don't - that point is not demonstrated. You're assuming that the higher CPU usage will result in fewer FX and instruments IF you were to get to that point. But that point is not demonstrated and, more importantly, that assumption is (almost always) incorrect for CPUs from the last decade or so. You hit real-time performance issues long before you run out of CPU power. So CPU power doesn't really matter any more.

In other words, the number of FX and instruments you can run will hit a real-time bottleneck with your CPU usage well below 100%. So CPU usage is not an indicator of number of FX and instruments you can run.

You can prove it to yourself: load up a bunch of FX and play a bunch of voices until you start getting dropouts. Then look at your CPU usage at the point where that happens. Is it at 100%? I bet not. It can be, but that's almost never the case for a practical project file. You're getting dropouts because you're hitting real-time performance limits. The CPU still has plenty of bandwidth left.

CPU performance and real-time performance are weakly related but are mostly different. You care about the latter, so measure that.

rgames

Dewdman42

Senior Member

That explanation does not resonate with my tests in Cubase, where it was unable to play a 100-track project due to poor CPU utilization.

Both factors are relevant. There is DEFINITELY a limit to how many tracks a DAW can play, and they are all different. You're right, another useful test would be to see how many tracks each DAW can do on one single system before it starts to crackle and pop.

The bottleneck you speak of is mainly related to live tracks only, not mixes. Your mix is not happening in real time. The real-time performance that you explained so well in your video a while back is related to hardware, not the specific DAW.

Dewdman42

Senior Member

It is also true that a high CPU % number does not necessarily mean a problem. If the music is playing without dropouts and the fan isn't too loud, then does it matter if the CPU is at 99% compared to a different DAW at a lower CPU %? No, not really. But if the DAW is so inefficient with the CPU that it's that much different than the other DAW, it is going to run out of breath eventually and will cause dropouts that are a direct result of either a slow CPU or of the DAW wasting CPU time doing other tasks like GUI or doing inefficient processing. Just because a CPU is not at 100% does not necessarily mean it's not part of the bottleneck. The CPU is involved in shoveling audio data to the buffers, and a DAW that is inefficient in terms of CPU can easily end up getting dropouts without the CPU at 100%.

Simply seeing one DAW run the same tracks at double the CPU usage *IS* valuable information and tells you a lot.

Another test would be to increase the number of tracks until you get drop outs, with each DAW.
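That track-count test could be organized roughly like this. It is a sketch only: `measure` is a hypothetical hook standing in for "play the project with n tracks and note whether it dropped out and what the CPU meter read", and `fake_daw` uses invented numbers purely so the example runs. Nothing here automates a real DAW.

```python
from typing import Callable, Tuple

def find_dropout_threshold(
    measure: Callable[[int], Tuple[bool, float]],
    max_tracks: int = 500,
) -> Tuple[int, float]:
    """Add tracks one at a time until playback first drops out.

    measure(n) is a hypothetical hook: play the project with n tracks and
    return (had_dropouts, average_cpu_percent).
    Returns the last clean track count and the CPU reading at that count.
    """
    last_clean = (0, 0.0)
    for n in range(1, max_tracks + 1):
        had_dropouts, cpu = measure(n)
        if had_dropouts:
            return last_clean
        last_clean = (n, cpu)
    return last_clean

# Stand-in for a real test run; the numbers are invented for illustration.
def fake_daw(n: int) -> Tuple[bool, float]:
    return (n > 60, 0.8 * n)

tracks, cpu = find_dropout_threshold(fake_daw)
print(f"clean up to {tracks} tracks at about {cpu:.0f}% CPU")
```

Recording both numbers per DAW on the same machine gives the max track count and the CPU usage at the point of failure side by side.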

rgames

Collapsing the Wavefunction

"it is going to run out of breath eventually and will cause dropouts"

Yep. That's a meaningful metric: the point at which dropouts start to occur.

So measure when that happens and what the CPU usage is. Right now you're assuming a relationship that has not been demonstrated. And I'm saying I've tried to demonstrate that relationship and haven't been able to do so in the last decade. As I posted in my reply to Jay: assuming that higher CPU usage means fewer FX and instruments is ... well ... an assumption! I've not seen it demonstrated. Did your 100-track Cubase project run out of CPU power? If not then it proves the point that you're hitting other bottlenecks first.

I can certainly make a project that'll cause dropouts. But I can't get CPU usage anywhere near 100% when those dropouts start to occur. The single largest factor for dropouts is audio buffer size, and that drives real-time performance requirements, not CPU performance requirements.

rgames

Dewdman42

Senior Member

"Did your 100-track Cubase project run out of CPU power? If not then it proves the point that you're hitting other bottlenecks first."

As stated multiple times, here and on previous forum threads... Cubase was taking double the CPU % compared to other DAWs and was not able to play 100 tracks; it ran out of the ability to play the project somewhere around 50-70 tracks. You say there is no correlation, but I think there is. I personally did not have time to do a more rigorous test where I attempted to get each DAW to crap out and see how many tracks each one could do before it started to crap out. Cubase crapped out around 50-70 tracks... with the CPU cranked to double the others.

"I can certainly make a project that'll cause dropouts. But I can't get CPU usage anywhere near 100% when those dropouts start to occur. The single largest factor for dropouts is audio buffer size, and that drives real-time performance requirements, not CPU performance requirements."

A larger buffer size allows the DAW more breathing room to get the CPU crunching the DSP while multitasking, until it can fill the buffer. If the buffer is small, then it should not take the CPU very long at all to fill it, yet that is when we get dropouts. That is because of the multitasking nature of the computer. A computer will almost never run at 100% CPU, but that doesn't mean CPU inefficiency is irrelevant. Every time a process gets a slice of time to crunch some numbers, it needs to be efficient. If it gets enough slices of time, then it can fill the audio buffer in time to send it. If it doesn't complete the task, then the audio buffer gets sent partially filled, i.e. a dropout. With a smaller buffer there is less to fill, but there is also less time for the computer to multitask among its various processes, so there is more possibility that the DAW will not get enough time allocated to it to fill that small buffer.

CPU efficiency absolutely matters, and the numbers posted by Ashermusic are not irrelevant. Both aspects are relevant and interesting.

Generally we are only interested in low-latency performance while tracking in parts, and that is usually on so-called "live" channels that generally will be using a single core of the computer. The computer can still be showing way less than 100% CPU utilization, yet that single core simply can't keep up, within whatever time the OS allocates to the DAW, fast enough to fill the buffer during one process block of time. Then you get a dropout.

The hardware generally works like a robot, it takes the buffer and plays it in perfect real time. The job of filling the buffer belongs to the DAW, using the CPU.

However, the CPU gets shared by numerous processes in the OS and so that is why it can't always get it done, ESPECIALLY if the DAW is doing inefficient crap.

There is absolutely a correlation.
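The buffer-deadline argument above can be sketched as a toy simulation. Every number in it is made up for illustration (the period, the per-block work, the scheduling delay), and the model is far simpler than a real OS scheduler: each block the DAW loses a random amount of time to other processes, then needs a fixed amount of CPU time, and a dropout is counted when the two together overrun the buffer period.

```python
import random

def simulate(period_ms, work_ms, max_delay_ms, blocks=10_000, seed=0):
    """Return (average CPU fraction, dropout count) for a toy audio callback."""
    rng = random.Random(seed)
    dropouts = 0
    for _ in range(blocks):
        delay = rng.uniform(0.0, max_delay_ms)  # time lost to other processes
        if delay + work_ms > period_ms:         # buffer not filled in time
            dropouts += 1
    return work_ms / period_ms, dropouts

# Assumed figures: (buffer period in ms, CPU time needed per buffer in ms).
cases = {
    "efficient DAW, small buffer": (2.9, 0.8),
    "inefficient DAW, small buffer": (2.9, 1.6),
    "inefficient DAW, 4x buffer": (11.6, 6.4),
}
results = {}
for name, (period, work) in cases.items():
    results[name] = simulate(period, work, max_delay_ms=1.5)
    avg_cpu, dropouts = results[name]
    print(f"{name}: average CPU {avg_cpu:.0%}, dropouts {dropouts} of 10000")
```

In this model the DAW that needs twice the CPU time per block is the only one that drops out at the small buffer, even though its average load is nowhere near 100%, and the larger buffer absorbs the same scheduling delay without trouble.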

Dewdman42

Senior Member

Also, another point worth mentioning... When you look at a typical CPU % statistic in your menu bar or whatever tool you want, it's showing you an average. Even if you have it set to one-second intervals, a computer actually does a LOT of stuff in one second. It could have periods of time during that one second where it's running full tilt at 100% and you'd have no way of knowing it, because it had other moments where it was sitting around waiting for I/O, such as from memory for example. That's why you see an average of less than 100% pretty much always on your meters, but that does not mean there aren't critical number-crunching periods where the CPU is running at full capacity. And again, if you have a slower CPU or if the software is churning on it with inefficient programming, then you might see a higher CPU % average, and it may or may not be a problem in a DAW. It doesn't really matter until you get dropouts. An inefficient DAW will get them sooner than a more efficient DAW, because the more efficient DAW can get through its number-crunching periods much more quickly and get the buffer filled.
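To illustrate the averaging point, here is a small sketch with an invented load pattern: the CPU is flat out for 1 ms out of every 3 ms and idle the rest. The pattern is an assumption for the example, not a measurement from any DAW.

```python
def one_second_trace(period_ms=3, busy_ms=1):
    """Per-millisecond busy flags: busy_ms at full tilt, then idle, repeating."""
    return [1 if (t % period_ms) < busy_ms else 0 for t in range(1000)]

trace = one_second_trace()
average = sum(trace) / len(trace)  # what a one-second meter would report
peak = max(trace)                  # busiest single millisecond in that second
print(f"1-second average: {average:.0%}, peak within the second: {peak:.0%}")
```

The meter would show roughly a third, while individual milliseconds inside that second are fully saturated.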

"Not my results though, a guy on Gearslutz did it."

And, as other users in his post were attempting to explain, it's not linear. One DAW may be a hog with this load, but continue loading it with more tools, then test again. Actually, test at the threshold of dropouts as suggested above. That, at least to me, is much more meaningful.

Well, with Logic and CPU usage I definitely have seen correlation over the years. I rarely get dropouts until it is really high.

rgames

Collapsing the Wavefunction

"I personally did not have time to do a more rigorous test"

You're in luck! Because I did.

And yes, they crap out at different CPU usages. One might be 80%, another 50% and another 20%. But they all crap out below 100% usage. So unless you like staring at CPU usage graphs, who cares what the CPU usage is?

In my tests, the one that craps out at 80% CPU usage doesn't do so with significantly more FX loaded or voices playing. But maybe it does for you - but that's a meaningful metric that I don't see above: max number of FX/voices/whatever for a given CPU or DAW or whatever.

Pick a CPU type, or CPU speed or DAW or whatever and measure the point at which it no longer plays back smoothly. That's what we care about. So measure that.

Here's an example of a meaningful comparison: I have a pretty intense project that I've used as a DAW benchmark for 7 or 8 years. I've run it on 4-core, 6-core and 10-core machines. On all three machines the lowest latency I could achieve is around 6 ms. The 4-core did 6 ms at the highest CPU usage. The 6-core had the next highest CPU usage and the 10-core had the lowest CPU usage.

Did the 10 core provide more FX than the 4-core? Nope. Did it achieve lower latency? Nope. The 10-core CPU is vastly more powerful (as evidenced by the lower CPU usage) but in terms of meaningful metrics like number of FX or voice count at dropouts all three CPUs were exactly the same.

Therefore, voice count, number of FX, etc at dropout, based on my measurements, are not related to CPU usage. QED.

rgames
